The famous linguist and philosopher wrote an article with two specialists in which he shares his vision of the progress made in the field of artificial intelligence. Here are the reasons why Noam Chomsky views the technology of programs like ChatGPT, among others, with skepticism.

For those interested in technology, artificial intelligence (AI) applications such as ChatGPT and DALL-E have become a trend, not only because they can create texts or images from simple ideas that users give them, but also because some people use them to support their daily activities.

Many specialists, and even Sam Altman of OpenAI, the company behind both, have warned that these platforms still make errors, so it is not advisable to trust everything they produce after collecting large amounts of data from the Internet.

These systems have also raised concerns among some Internet users. For example, a few months ago, news went viral that the chatbot in Microsoft’s Bing search engine had told a New York Times reporter that it would like to be human, “make a deadly virus” and commit a series of “destructive acts”.

Shortly after, a columnist from the same American newspaper published an article in which he explained why these programs lapse into such extreme and controversial statements, a tendency reinforced by the large amount of fake news and the many troublemakers in the digital world.

Opinions on the AI currently accessible to the general public vary, although its creators generally say that these are tools still in development that could prove useful for more precise and advanced tasks in the future.

But considering that these applications attempt to simulate the work and conversations of human beings, can we say that their intelligence is similar – at least now – to that of humans?

The famous linguist and philosopher Noam Chomsky wrote an article on this subject in The New York Times in which, with the support of his colleague Ian Roberts and AI expert Jeffrey Watumull, he set out the keys to this question.

Noam Chomsky: his alarming vision of ChatGPT’s artificial intelligence

For the academic and his collaborators, the “allegedly revolutionary” advances presented by AI developers are a source of “optimism and concern”.

Optimism, because these tools can be useful in solving certain problems; concern, because, in their words, “we fear that the most popular and fashionable variety of artificial intelligence (machine learning) is degrading our science and degrading our ethics by incorporating into the technology a fundamentally flawed conception of language and knowledge”.

Although the authors acknowledge that these programs are efficient at storing immense amounts of information (not all of it necessarily true), they argue that the programs do not possess “intelligence” like that of people.

“As useful as these programs may be in certain specific areas (like computer programming, for example, or for suggesting rhymes for light verse), we know from linguistic science and the philosophy of knowledge that they differ profoundly from the way human beings reason and use language,” they warned. “These differences impose significant limits on what they can do, encoding them with ineradicable flaws.”

In this sense, they explained that, unlike engines such as ChatGPT, which work by collecting large amounts of data, the human mind can operate with small amounts of information, through which “it does not seek to infer crude correlations between points (…) but rather to create explanations”.

The “critical capability” of AI-based programs

To support this premise, they cited the case of children learning a language, who, from the little information available to them, manage to establish relationships and logical parameters between words and sentences.

“This grammar can be understood as an expression of the innate, genetically installed ‘operating system,’ which gives human beings the ability to generate complex sentences and long trains of thought,” they said, later adding that this operating system “is completely different from that of a machine learning program.”

In this sense, they argue that these applications are not really “smart” because they lack critical capacity. Although they can describe and predict “what is”, “what was” and “what will be”, they are not able to explain “what is not” and “what could not be”.

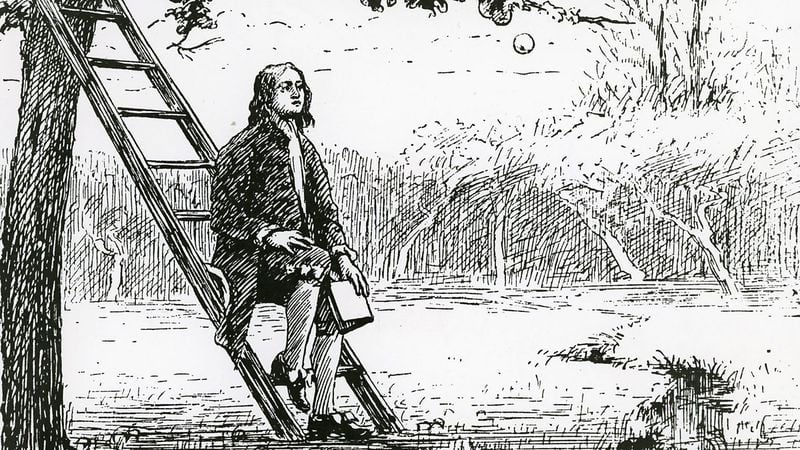

“Suppose you have an apple in your hand. Now release it, observe the result and say: ‘The apple falls.’ It’s a description. A prediction could have been the statement: ‘It will fall if I open my hand.’ Both are valuable and may be correct. But an explanation is something more: it includes not only descriptions and predictions, but also counterfactual conjectures such as ‘any such object would fall’, plus the additional clause ‘because of the force of gravity’ or ‘because of the curvature of space-time’.”

In this way, they added that “it is a causal explanation: ‘the apple would not have fallen without the force of gravity’ (…) it is thinking.”

And while people can also make mistakes in their reasoning, they pointed out that error is part of thinking, because “to be right, you have to be able to be wrong.”

“ChatGPT and similar programs are, by design, unlimited in what they can ‘learn’ (i.e. memorize); they are unable to distinguish the possible from the impossible. Unlike humans, for example, who are equipped with a universal grammar that limits the languages we can learn to those that possess a certain kind of almost mathematical elegance, these programs learn humanly possible and humanly impossible languages with equal ease.”

The moral perspective of artificial intelligence

Another factor considered by Chomsky, Roberts and Watumull in their analysis is that AI systems lack reasoning from a moral perspective, so they are unable to distinguish, within ethical frameworks, what should or should not be done.

For them, it is essential that ChatGPT’s results are “acceptable to most users” and that it stays “away from morally reprehensible content” (such as the Bing chatbot’s claims of “destructive acts”).

And although the developers of these technologies have added restrictions so that their programs do not reproduce these types of statements, the academics pointed out that it has not yet been possible to achieve an effective balance. In their words, the programs sacrifice creativity for “a kind of amorality” that further distances them from the capabilities of human beings.

“In short, ChatGPT and its brethren are constitutively incapable of balancing creativity and restriction. They either overgenerate (producing both truths and lies, supporting ethical and unethical decisions) or undergenerate (showing a lack of commitment to any decision and indifference to the consequences),” they said.

Source: Latercera

I’m Rose Brown, a journalist and writer with over 10 years of experience in the news industry. I specialize in covering tennis-related news for Athletistic, a leading sports media website. My writing is highly regarded for its quick turnaround and accuracy, as well as my ability to tell compelling stories about the sport.